While everyone debates whether AI will replace developers, we’re missing the more interesting story. We’ve built the most sophisticated paste machine in human history and convinced ourselves it was thinking.

Google helped us search the global knowledge base. YouTube helped us learn from others. GitHub gave us access to code, but you had to understand it, adapt it, debug it. Stack Overflow helped us debug our own bad decisions: it was paste plus comments, and you still had to figure out whether the useful answers applied to your situation.

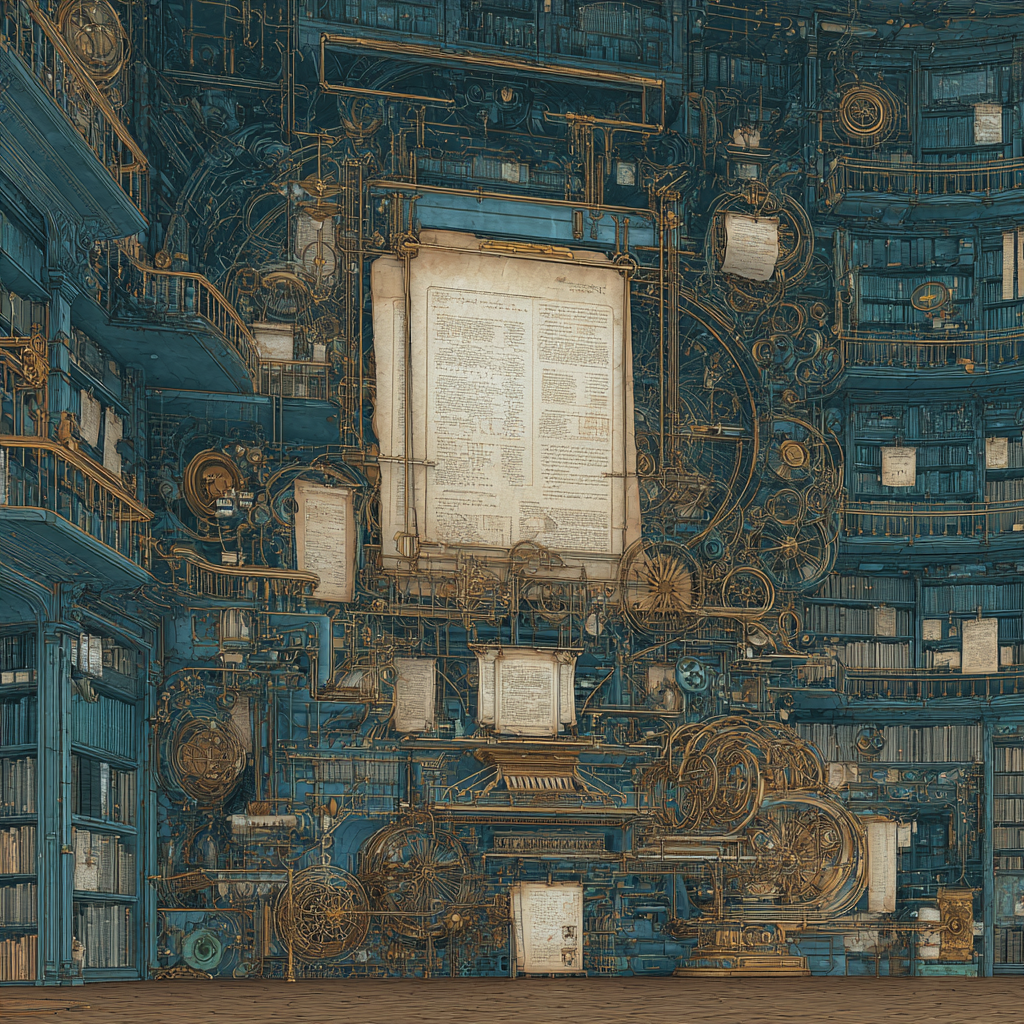

LLMs skip the entire scavenger hunt. They take that authentication pattern from 2023, that payment flow from a Berlin startup, that error handling from Python’s style guide, and seamlessly reformat it all into exactly what you need, in your language, with your variable names, for your specific context.

Every response is custom-tailored plagiarism. And I mean that as the highest compliment.

The model is not thinking. Making the most of attention meant training on the largest corpus available, and the approach scaled better than anyone expected. So we fed it everything. All of written knowledge. Every blog post, every code snippet, every Stack Overflow answer, every GitHub repo that wasn’t locked down. We accidentally mutualized everyone’s intellectual property in the name of making the numbers go up. Now we have access to everyone’s pastebin ever, minus whatever’s behind proprietary walls.

Next token prediction is not intelligence. It’s statistical machinery operating at a scale that masks its own simplicity. But the machine is incredible and the wonder is justified. I’m no Luddite.
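To make "statistical machinery" concrete, here is a toy sketch of next token prediction: a bigram counter that picks whichever word most often followed the current one in its training text. It is not how a real LLM is built (those use neural networks over long contexts and billions of parameters), and the function names and tiny corpus are made up for illustration. It only shows how simple the basic move is.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token is followed by each other token."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, token):
    """Return the most frequent follower of `token`, or None if never seen."""
    candidates = follows.get(token)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows, start, max_tokens=5):
    """Greedily extend `start` by repeatedly predicting the next token."""
    out = [start]
    for _ in range(max_tokens):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


# Hypothetical ten-word "corpus"; a real model sees trillions of tokens.
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)

print(predict_next(model, "the"))             # cat
print(generate(model, "sat", max_tokens=3))   # ['sat', 'on', 'the', 'cat']
```

Everything the toy "knows" is a count of what came next in text somebody else wrote. Scale the corpus and the machinery up far enough and that is the paste machine.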

When we one-shot the sort of website that used to take a team weeks, we should not pretend we don’t see what this is. It might be genius that it’s possible, but it’s also the usurpation of everything we ever published, scraped, shared, or shipped.

- web 1 → infinite read

- web 2 → infinite read-write

- web 3 → infinite read-write-own

- ai 1 → infinite pastebin

- ai 2 → infinite remix

- ai 3 → infinite agency?

The Paste Machine Discovery

The LLM retrieves solutions quickly, combines them quickly, and iterates quickly with agentic loops in ways that create new solutions. It still sucks at true “reasoning” through a problem, but its pattern-matching and mimicry at scale become functionally equivalent to reasoning. That gives us a decade’s worth of work.

Here’s what matters for business: the machine can search almost any solution humanity has ever published, customize it for your specific needs, and combine solutions in ways that create new ones. Whether you call it intelligence or sophisticated paste is mostly irrelevant. It’s not perfect, and AGI will take some time, so you’d better get to work with what you have.

The New Strategic Framework: Paste vs. Intelligence Tasks

Companies need a clear framework for AI deployment. Based on patterns emerging from early enterprise adoption, three categories stand out:

Paste-Optimal Tasks

These are where AI excels and should be deployed immediately:

- Integration work: Connecting APIs, handling authentication flows, payment processing

- Code adaptation: Taking existing solutions and customizing for new contexts

- Pattern application: Implementing known architectural patterns with company-specific requirements

- Boilerplate generation: Creating repetitive code structures with proper error handling

Organizations report that integration development that previously took senior engineers weeks can now be completed by junior developers in days when AI handles the boilerplate and adaptation work.

Intelligence-Required Tasks

These still need human reasoning and shouldn’t be fully automated:

- Novel problem solving: First-time challenges with no existing patterns

- Strategic architecture decisions: System design trade-offs with long-term implications

- Edge case handling: Unusual scenarios that require judgment calls

- Business logic definition: Domain-specific rules that require deep context

When companies build new multi-tenant isolation systems or design novel data architectures, the strategic decisions around partitioning and boundaries require deep technical judgment. But implementing those designs? That’s paste-optimal work now.

Hybrid Zones

The most interesting opportunities combine paste and intelligence:

- Rapid prototyping: AI handles boilerplate, humans design novel interactions

- Code review: AI catches common issues, humans evaluate architectural decisions

- Documentation: AI drafts technical docs, humans ensure strategic clarity

- Testing: AI generates test cases, humans design test strategy

The companies winning with AI aren’t replacing humans—they’re optimizing this division of labor.

What Happens to Software Itself

Traditional software becomes the substrate that gets remixed. Every app, every feature, every interaction pattern ever built becomes part of the paste buffer. Users won’t need to know where the paste came from.

Major platforms understand this. Salesforce’s Agentforce isn’t trying to replace salespeople; it’s giving every salesperson access to the collective knowledge of every top performer who ever closed a deal. Same with ServiceNow’s workflow automation and Microsoft’s Copilot integrations. They’re building paste machines for domain-specific expertise.

The boundaries between applications dissolve when any capability can be summoned and reshaped on demand. But someone still needs to create the original patterns that get pasted. Someone still needs to push the boundaries, create the new solutions that tomorrow’s AI will remix.

What Remains Human

This is why AI hasn’t made programming obsolete, just different. The truly novel problems—the ones nobody has solved before—still require that grinding, incremental push forward. AI can instantly get you to where humanity left off yesterday, but that last mile? That’s still on us.

The transistor is a stupidly simple device. A switch that can be turned on and off with electricity. That’s it. But stack enough of them together, arrange them in the right patterns, and you get everything from the Apollo Guidance Computer to the phone in your pocket. The simplicity of the building block had nothing to do with the sophistication of what we built with it.

Next token prediction might be the transistor of intelligence. A simple mechanism that, at scale, lets us tame information the way transistors let us tame electricity. We’re probably still in the vacuum tube era of this thing, fumbling around, building room-sized machines to do what will eventually fit in our pockets. But the trajectory is clear. And if you’re not in awe of what’s coming, you’re not paying attention.